The Algorithm Design Manual is divided into two parts: the first part introduces algorithm design and analysis, and the second part contains a catalog of the 75 most important algorithmic problems arising in practice. This review focuses on the first part.

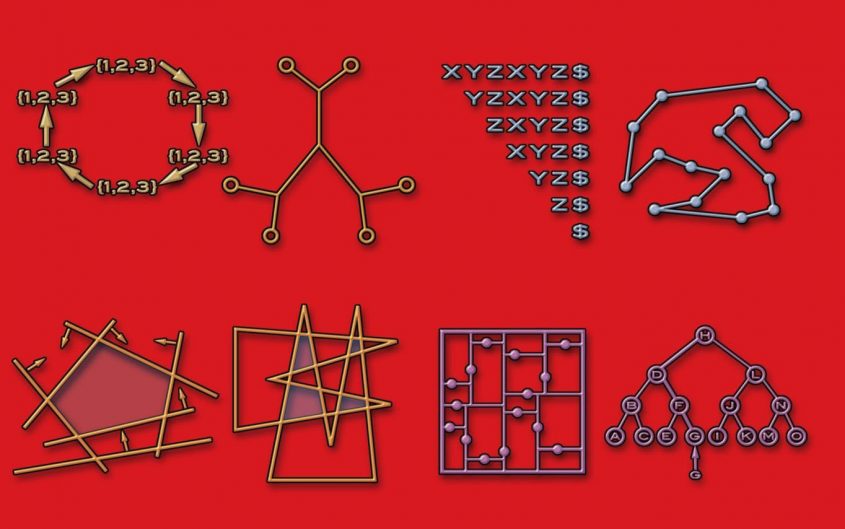

The first part thoroughly introduces the common data structures involved in most algorithmic problems. These problems (sorting, searching, graphs, heuristics, etc.) are then discussed in more detail, along with their typical algorithmic solutions.

As a software developer you should be comfortable with these data structures and algorithms, at least the most common ones. However, there were a few other things that I found interesting and important. First, there is the topic of correct versus good enough, which matters in many aspects of software development. When you encounter a complex problem that requires an algorithm, ask yourself whether the algorithm really needs to be correct all the time. If it is acceptable to sometimes get an answer that is not exactly correct, but close, you can make a lot of neat optimizations. On top of this, many intractable problems suddenly become feasible. So keep this in mind!
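To make the trade-off concrete, here is a small sketch (my own, not code from the book) that compares an exact brute-force solution to the traveling salesman problem with the nearest-neighbor heuristic. The exact version always finds the shortest closed tour but tries every ordering, so it is only usable for a handful of points; the heuristic runs in roughly quadratic time but can return a longer tour. The function names and the point set are illustrative assumptions.

```python
import itertools
import math

Point = tuple[float, float]


def tour_length(points: list[Point], order: list[int]) -> float:
    """Length of the closed tour that visits points in `order` and returns home."""
    return sum(
        math.dist(points[order[i]], points[order[(i + 1) % len(order)]])
        for i in range(len(order))
    )


def exact_tour(points: list[Point]) -> list[int]:
    """Always correct, but O(n!): tries every ordering that starts at point 0."""
    best = min(
        itertools.permutations(range(1, len(points))),
        key=lambda perm: tour_length(points, [0, *perm]),
    )
    return [0, *best]


def nearest_neighbor_tour(points: list[Point]) -> list[int]:
    """Good enough and O(n^2): always move to the closest unvisited point."""
    order = [0]
    unvisited = set(range(1, len(points)))
    while unvisited:
        here = points[order[-1]]
        closest = min(unvisited, key=lambda i: math.dist(here, points[i]))
        order.append(closest)
        unvisited.remove(closest)
    return order


if __name__ == "__main__":
    # Hypothetical example: points on a line, starting from the origin (index 0).
    xs = [0, 1, -1, 3, -5, 11, -21]
    points = [(float(x), 0.0) for x in xs]
    print("exact:           ", tour_length(points, exact_tour(points)))
    print("nearest neighbor:", tour_length(points, nearest_neighbor_tour(points)))
```

For these collinear points the optimal closed tour has length 2 × (11 - (-21)) = 64, while nearest neighbor keeps zig-zagging across the origin and ends up with a longer tour. Whether "longer" is acceptable is exactly the correct-versus-good-enough question.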

One related technique is simulated annealing, a heuristic that approximates the global optimum of a function over a large search space. It is very useful because it sometimes accepts moves to solutions with worse values, which allows the algorithm to escape local optima. However, the algorithm's likelihood of accepting worse options decreases as the search progresses (as the temperature decreases). The name comes from annealing in metallurgy, where a heated metal is cooled down slowly.
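A minimal sketch of the idea, assuming a one-dimensional search space, a caller-supplied `neighbor` function, and a geometric cooling schedule (all of these, including the parameter names and default values, are my own illustrative choices rather than anything prescribed by the book). Improvements are always accepted, while a worse candidate is accepted with probability exp(-delta / temperature), which shrinks as the temperature falls.

```python
import math
import random
from typing import Callable, Optional


def simulated_annealing(
    cost: Callable[[float], float],
    start: float,
    neighbor: Callable[[float, random.Random], float],
    initial_temp: float = 10.0,
    cooling_rate: float = 0.995,  # assumed geometric cooling factor
    min_temp: float = 1e-3,
    seed: Optional[int] = None,
) -> float:
    """Return the lowest-cost point seen while annealing from `start`."""
    rng = random.Random(seed)
    current = best = start
    current_cost = best_cost = cost(start)
    temp = initial_temp
    while temp > min_temp:
        candidate = neighbor(current, rng)
        candidate_cost = cost(candidate)
        delta = candidate_cost - current_cost
        # Always accept improvements; accept a worse candidate with
        # probability exp(-delta / temp), which shrinks as temp falls.
        if delta <= 0 or rng.random() < math.exp(-delta / temp):
            current, current_cost = candidate, candidate_cost
            if current_cost < best_cost:
                best, best_cost = current, current_cost
        temp *= cooling_rate  # cool down a little after every step
    return best


def bumpy(x: float) -> float:
    # 1D Rastrigin function: many local minima, global minimum 0 at x = 0.
    return x * x - 10 * math.cos(2 * math.pi * x) + 10


def step(x: float, rng: random.Random) -> float:
    # Hypothetical neighbor function: a random step of at most 1 in either direction.
    return x + rng.uniform(-1.0, 1.0)


if __name__ == "__main__":
    result = simulated_annealing(bumpy, start=4.5, neighbor=step, seed=42)
    print(result, bumpy(result))
```

Early on, the high temperature lets the search hop over the bumps between local minima; as it cools, the search behaves more and more like plain greedy descent.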

There were a few things that bugged me while reading this book. I think the biggest problem is that almost every section seemed to leave a lot of room for improvement. I read the second edition, which feels more like a first draft than a finished book. The explanations are sub-par, and the code quality is poor, which is just unacceptable considering this is the second edition of the book and the author had 11 years to improve it.

In terms of factual content, the book may well merit a second edition, but the author should definitely have spent more time improving the explanations and the readability of the code. The book really seems to be written by a stereotypical algorithm programmer, who sacrifices every bit of readability in favor of minuscule speed improvements (either speed of the algorithm or speed for the author to write).

In terms of readability, the code quality was horrible at worst and decent at best, which just seems extremely lazy. If you are writing a book about programming, why not spend a tiny amount of extra effort to make it easier for the reader? Is it really necessary to use variable names such as a, b, c, x, and y, when there are plenty of logical names available if you just think about what the variable represents? Considering this is not the first edition of the book, I find it totally unacceptable and negligent of the author to have such poor code quality throughout the book.

The second part is meant to be used in a different way, as it is a catalog of the most important algorithmic problems arising in practice. I have only quickly skimmed this part, so I can’t tell you anything about the quality of the content. However, this part seems to be the strength of the book, where practitioners can identify what the problem they have encountered is called, what is known about it, and how they should proceed if they need to solve it. I will certainly try this out the next time I am faced with a problem like this.

Overall, I did not like the book very much, as I think there are numerous websites and books that explain things in an easier way. That said, I have higher hopes for the second part, which I will try to use in the future.